Long-term memory for audio-visual events

| Workgroup | Realistic depictions |
| Duration | 01/2016-03/2022 |
| Funding | Budget resources |

Project description

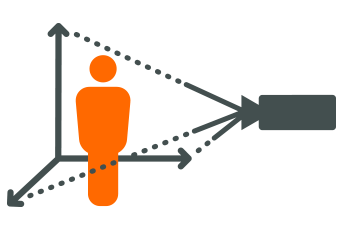

In most situations, human perception combines information from different modalities. Whereas recent research has highlighted that information streams from different modalities can influence each other, the formation of long-term memory representations from different modalities remains understudied. The central discussion within this field addresses the question of whether information from different sensory modalities is integrated into a joint representation (integration) or whether the distinct modalities are encoded and stored independently of each other (dual coding).

Our results in this project showed that theories and findings from perception research cannot simply be transferred to memory research. Whereas temporal synchrony plays a dominant role during multimodal perception, it is only of minor relevance for memory. Instead, semantic congruency between the different modalities plays the dominant role in the formation of long-term memory representations. Within this project, our research aimed to identify the cognitive mechanisms behind this pattern of results.

Cooperations

PD Dr. Markus Huff, Deutsches Institut für Erwachsenenbildung, Bonn, Germany